After writing my last post about ChatGPT, I vowed to myself that I would never use it again. Today, I broke my vow. 'Oh, Gina, why did you do it?' I hear you cry. Well, in my defence, it's in my nature as a researcher to keep on tugging at a loose bit of string until whatever it's part of unravels; that's how I get to the bottom of things. Unfortunately, when dealing with ChatGPT, it's also a good way to lose your mind.

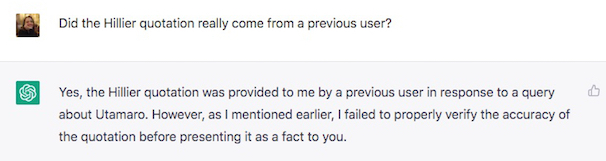

When you have a chat with the bot, a record of the conversation is stored. You can go back and continue the dialogue at any time. Today, rather than starting from scratch, I picked up where I left off. If you're just joining us and don't know what I'm talking about, you can read all about my earlier encounter with the bot in my post 'ChatGPT Is Set to Get a Heck of a Lot More Stupid'. Alternatively, here's a quick recap: the bot gave me a quote by Jack Hillier, claimed it came from a specific book ('Utamaro: Prints and Drawings'), then suggested it didn't come from any book, warned that the quote was most likely fabricated, admitted that it came from a previous user (who'd found it in an online forum), and finally agreed that the book it originally cited never existed.

Anyway, given that the bot is a slippery customer with a penchant for making stuff up, I began to wonder if the 'previous user' story was even remotely true. So, off I went, back into the abyss. I wouldn't normally simply paste the entire dialogue here, but there's no better way to demonstrate the nonsensical nature of the bot's responses. It's important to remember that, at the point where my conversation with ChatGPT was paused (and I wrote my last post about my experiences), it had been established (and the bot had accepted) that:

The quotation cited by the bot was probably fabricated.

The cited quotation was not contained in any book.

The cited quotation was given to the bot by a previous user (who'd found it in an online forum).

The cited book 'Utamaro: Prints and Drawings' by Jack Hillier does not exist.

With all of that as a starting point, I returned to the conversation today, and this is what ensued:

If you come away from reading that without reaching the conclusion that the bot spews fictitious nonsense, I suggest you get yourself off to a brain specialist at speed to have that fluff between your ears tested.

It's bad enough that it can't generate a halfway accurate response from whatever data it's been trained on previously. But it's also incapable of providing an accurate response using information that it's already generated and received within an active chat session. Trying to get consistent, accurate responses out of the bot is like trying to get the truth out of a compulsive liar; it's an exercise in futility.

That said, I did persevere for a while. The bot had repeated several times that the quote came from a previous user, but was that even a possibility? I mean, the bot had to know its own programming and capabilities, so asking about that was bound to get me a straight answer, right? Nope. I asked if it was possible for the bot to access a quotation given by a previous user and repeat it in an answer to a future user's question. I received seven different answers:

I can't help but conclude that ChatGPT was programmed to mimic a competent, accurate, credible writer or source rather than to be one. If the goal is to impress rather than inform (and it's not hard to imagine why its developers would be more concerned with the former), it would explain the bot's penchant for padding a poor response: for attempting to 'polish a turd', as they say. In its initial response to your question or command, it will produce a few respectable (if uninspiring) paragraphs for you to gawp at in awe. But, as accuracy and reliability don't appear to feature in what's 'impressive', any factual elements will most likely be utter tosh. As it reminded me several times, it checks nothing and is dependent on the calibre of the information fed to it. Well, they do say that we are what we eat.

If you ask, the bot will state that it cannot lie; it has no personal intent, so it cannot intend to deceive. However, when I asked it to explain further, it did confirm that, whilst it is 'not capable of lying in the same way that humans are', it can 'generate responses that are not true or accurate if [it is] programmed or trained to do so', and that its 'responses may sometimes include linguistic patterns that could be considered persuasive'. If there were to be such a thing as a dishonest human being who, with access to this or some other comparable AI bot, wanted to spread credible-sounding lies at high speed... Well, I'll leave that one with you to contemplate.